Hi, I’m trying to implement soft shadows in my macOS Objective-C application. Your sample playground looks gorgeous, but I can’t quite see how to achieve the same effect with ordinary geometry passed to the vertex shader via drawIndexedPrimitives:.

How should I intersect the ray with the geometry?

And how should I dispatch the threads?

Is there sample code I can learn from?

I would advise exploring acceleration structures. Metal by Tutorials provides an introduction to ray tracing concepts, but doesn’t go that far.

Apple’s recent Metal 3 advances ray tracing on the GPU substantially.

Specifically, start off with the ray tracing WWDC videos from 2021 and 2022. There’s a ton of sample code too, most of it in Objective-C.

The sample Accelerating ray tracing and motion blur produces shadows using an obj file.


Intel gave us a gift: Path tracing workshop

The first part of this shows how to do Ray-mesh intersection to see if rays hit triangles (rather than spheres and rectangles as described in MbT).
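To make the ray-mesh idea concrete, here is a minimal CPU-side sketch of a ray-triangle test using the well-known Möller–Trumbore method. It is not taken from the Intel workshop or from Apple's samples; the `Vec3` type and the `intersectTriangle` name are made up for illustration, and a real renderer would run this kind of test on the GPU behind an acceleration structure.

```cpp
#include <cmath>
#include <optional>

// Hypothetical minimal vector type for this sketch.
struct Vec3 { float x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Möller–Trumbore ray-triangle test.
// Returns the distance t along the ray (hit point = origin + t * dir),
// or std::nullopt when the ray misses the triangle (v0, v1, v2).
std::optional<float> intersectTriangle(Vec3 origin, Vec3 dir,
                                       Vec3 v0, Vec3 v1, Vec3 v2) {
    const float eps = 1e-7f;
    Vec3 e1 = sub(v1, v0);
    Vec3 e2 = sub(v2, v0);
    Vec3 p = cross(dir, e2);
    float det = dot(e1, p);
    if (std::fabs(det) < eps) return std::nullopt;  // ray parallel to triangle
    float invDet = 1.0f / det;
    Vec3 s = sub(origin, v0);
    float u = dot(s, p) * invDet;                   // first barycentric coordinate
    if (u < 0.0f || u > 1.0f) return std::nullopt;
    Vec3 q = cross(s, e1);
    float v = dot(dir, q) * invDet;                 // second barycentric coordinate
    if (v < 0.0f || u + v > 1.0f) return std::nullopt;
    float t = dot(e2, q) * invDet;
    if (t <= eps) return std::nullopt;              // hit is behind the ray origin
    return t;
}
```

A shadow ray is the same test with the origin at the shaded point and the direction pointing at the light: any hit closer than the light means the point is in shadow.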

You should still explore Apple’s ray-tracing APIs though, as they will be optimised.


Thank you, Caroline! This sounds really good. I have already taken a look at the Apple Hybrid Rendering sample. I will take this workshop and come back here soon. I am very interested in real-time ray tracing, path tracing and rendering.


Hi Caroline,

I’m studying the Apple sample code for path tracing. It looks really cool. The code is here

I added a sphere to the scene, a texture to the objects, then I added the specular color effect to the shader. My camera is now movable/zoomable using the trackpad.

- The path tracing is not real time. It takes 2 or 3 seconds before the image looks good and loses the pixelated effect, and this is a very simple scene with only a few triangles. I can’t use it in an animation at 60 FPS or even 30 FPS, while everybody on the web talks about real-time path tracing as the latest revolution. Do I need a special video card to achieve a good speed? I’m currently working on a MacBook Pro 17" late 2019 (Intel) with an AMD Radeon Pro 5500M 4 GB. Is my video card too slow, or am I missing something in the code?

- The final colours look pale (desaturated) even if I remove the tone mapping line from the copyFragment shader:

// color = color / (1.0f + color);

Also, I can’t understand why, in the code, uniforms->light.color is vector3(4.0f, 4.0f, 4.0f). Why 4.0 and not 1.0?

- I added the specular effect by modifying the shadeKernel shader. I reused the same specular shader code that works correctly in a Phong shader in my old software (look at the balloon below). In the shadeKernel shader (picture above) it doesn’t look realistic, no matter which specular and shininess values I choose.

float3 specularColor = 0;
if(bounce == 0)
{
    if(diffuseFactor > 0.01)
    {
        float shininess = 200.0;
        float attenuation = 1.0;
        float specularIntensity = 1.0;
        float3 V = ray.direction;
        float3 R = normalize(reflect(lightDirection, surfaceNormal));
        float specularFactor = pow(max(dot(V, R), 0.0), max(shininess, 1.0));
        float specularSmooth = smoothstep(1e-3f, 1.0, specularFactor);
        specularColor = uniforms.light.color * specularSmooth * attenuation * specularIntensity;
    }
}
shadowRay.color = lightColor * color + specularColor;

- The soft shadows are nice, but their sharpness looks fixed. Normally a shadow looks sharper when the distance from the object to the shadow is smaller than the distance from the object to the light.

- The white spot you can see on the front wall looks oval rather than square like the light’s shape. It comes from the specular effect. It looks wrong.

- I haven’t yet found a way to add a mirror effect to the objects. Since the surfaces already partially pick up colours from the side walls (left and right), I thought it would be easy to reflect those walls (and any other objects in the scene) at 100%. I guess I have misunderstood some concept.

- As far as I can see, path tracing requires loading the whole scene (vertices, normals, colours…) onto the GPU using an acceleration structure and an intersector. I can’t yet imagine how to rethink my data structures, since I also have to pass the GPU the materials, lights, textures and matrices (to animate the objects and the textures). I thought of using an object ID (to retrieve the mesh ID and then the material), but I can’t see myself adding an object ID to every vertex of the scene. Is there a canonical way to structure the scene data of a real-time animation that suits the path-tracing scheme?
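The object-ID idea does not necessarily need an ID on every vertex. A minimal sketch, assuming the intersector reports the index of the triangle that was hit (both the MPS ray intersector and Metal's ray tracing API can return a primitive index): keep one small lookup table per triangle and one per object. All type and function names below are hypothetical, and this is only one possible layout, not a canonical one.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical material record; a real one would also carry texture indices etc.
struct Material {
    float baseColor[3];
    float shininess;
};

// Hypothetical scene tables, uploaded to the GPU as plain buffers.
struct SceneTables {
    std::vector<uint32_t> primitiveToObject;  // one entry per triangle
    std::vector<uint32_t> objectToMaterial;   // one entry per object
    std::vector<Material> materials;          // shared material list
};

// Resolve the material for a hit, given the triangle index from the intersector.
const Material& materialForPrimitive(const SceneTables& scene, uint32_t primitiveIndex) {
    uint32_t objectId = scene.primitiveToObject.at(primitiveIndex);
    uint32_t materialId = scene.objectToMaterial.at(objectId);
    return scene.materials.at(materialId);
}
```

The same object ID could index a per-object matrix buffer for animation, so moving an object would mean updating one matrix rather than touching the vertex data.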

Thank you for your valuable suggestions and pointers.

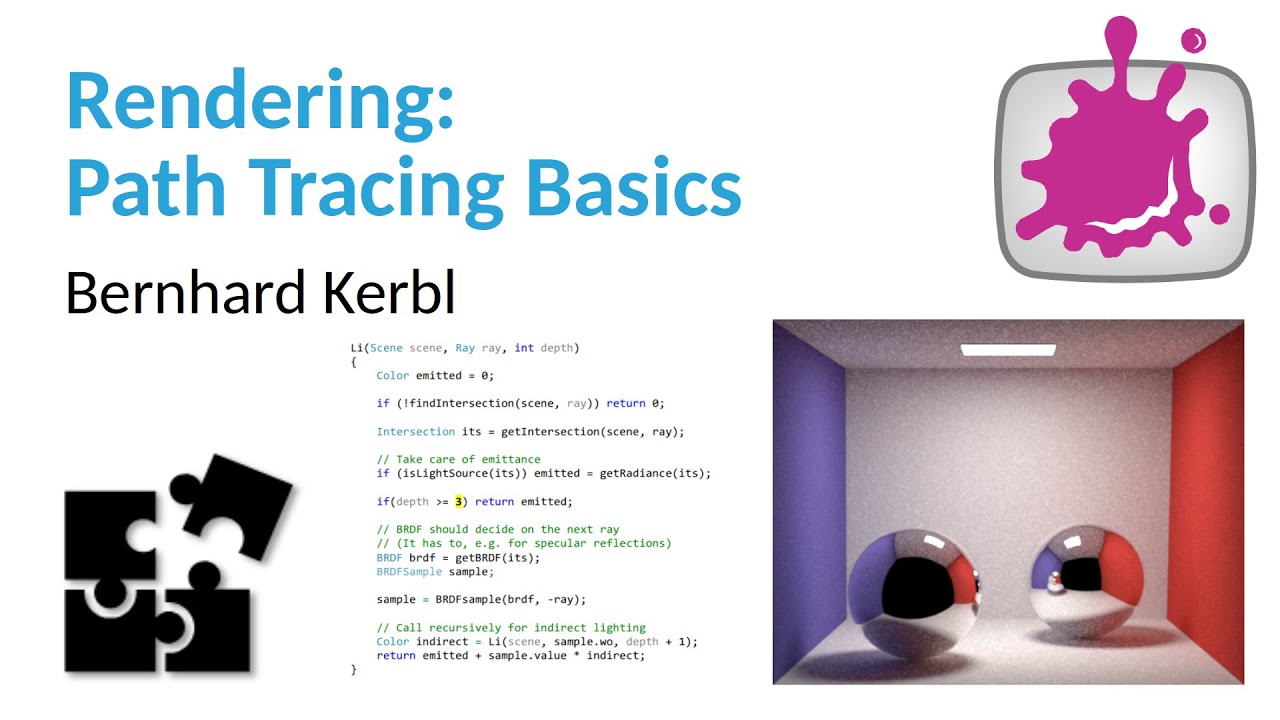

P.S. I have found a good video lesson about path tracing. Here is the link for your convenience.

Hmm I don’t know that I can address these in a reasonable amount of time.

- I don’t know enough about the most recent advances, but path tracing is enormously complicated, and requires a ton of hardware. NVIDIA has made the most advances in this field, and Apple is either playing catch-up, or coming up with their own hardware solution. (See point 7 re hybrid rendering)

- It might be to do with your texture being sRGB and not matching the shader? The Apple sample looks fully saturated if I change their color values to being fully red/green.

- x4 produces more light than x1. Try changing the values to 1.0 and see what you get.

-

- As far as I know, there isn’t any “canonical” way. It depends on what you need. Acceleration structures have been used for a while. It could be that hybrid rendering, with mesh shaders plus ray-traced shadows and reflections, is the way to go.

For example, this hybrid rendering pipeline from Ray Tracing Gems:

Also see Apple’s talk on Hybrid Rendering: Explore hybrid rendering with Metal ray tracing - WWDC21 - Videos - Apple Developer

Thank you for the link. It looks like a great playlist: https://youtube.com/playlist?list=PLmIqTlJ6KsE2yXzeq02hqCDpOdtj6n6A9


If you want ray-traced shadows in real time, you will have to use the MPS denoiser with hybrid rendering.

This example shows exactly that.
